Why?

I like data and cannot lie

Summary

Objective: Find new cafes around Montreal to try out

Problem statement: Finding cafes at random is tedious, and blog sites often repeat the same well-known cafes.

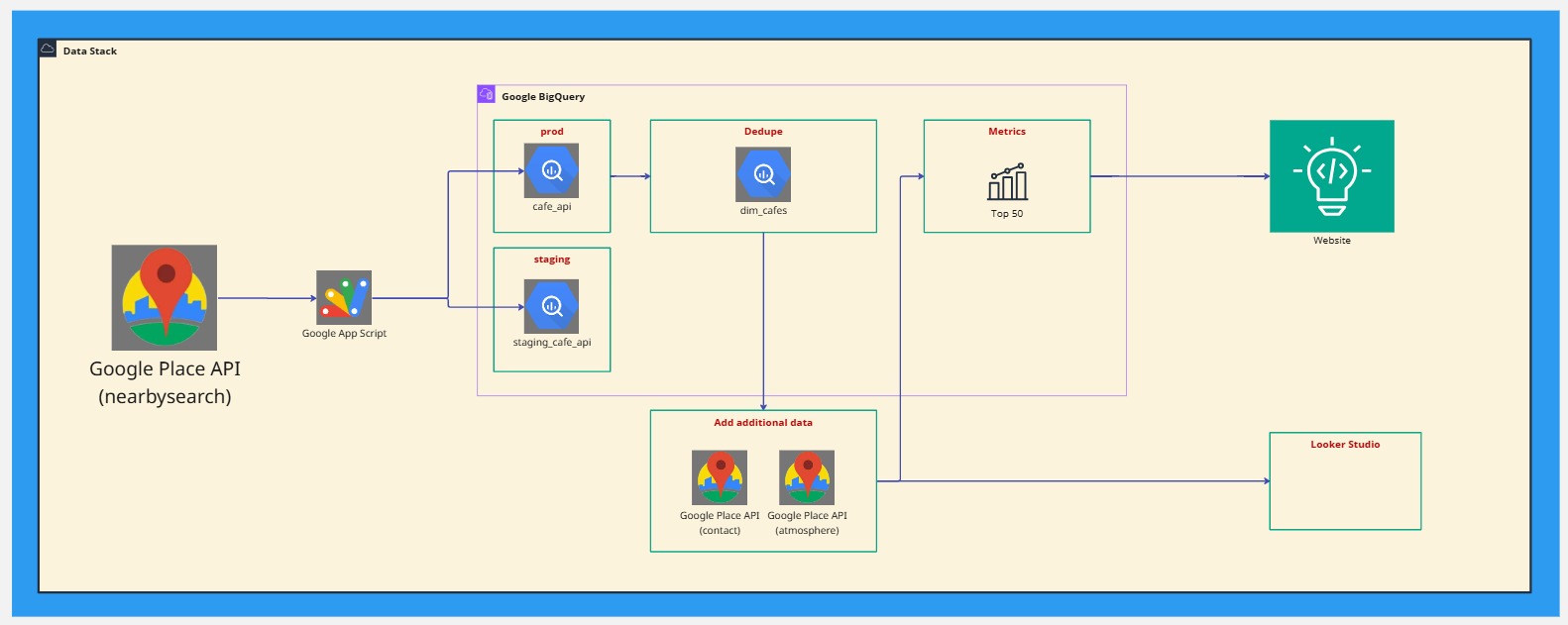

Solution: Use the Google Places API with a modern data stack to fetch cafes and filter them into a regularly updated list, using Google Reviews and recent review activity to find trending places.

Tools Used

Google Sheets, Apps Script, BigQuery, Google Places API, ChatGPT/Gemini, Cloudflare

MVP

To validate that my idea would work, I started with what I know best: Google Sheets and Apps Script. I had to create a new API key in my GCP project with access to the Google Places API, plus a few geo functions, since I needed to determine the latitude and longitude for each search.

API challenges

The Google Places API has two important restrictions that made it hard to find every cafe in the city:

- Limit of 60 results per search query (returned as up to three pages of 20)

- Limit of 5 reviews per place
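
The result cap can be detected in code, which is useful for deciding when a search area needs to be split. Below is a minimal sketch, assuming the legacy Text Search response shape (a `results` list plus an optional `next_page_token`). `fetch_page` is a hypothetical callable standing in for the real HTTP request, and the project itself used Apps Script, so this Python version is only illustrative.

```python
from typing import Callable, Dict, List, Optional, Tuple

MAX_PAGES = 3   # a query is served as at most three pages
CAP = 60        # 3 pages x 20 results

def collect_results(
    fetch_page: Callable[[Optional[str]], Dict]
) -> Tuple[List[Dict], bool]:
    """Follow next_page_token until it runs out or the page limit is reached.

    Returns (results, hit_cap). hit_cap=True suggests the area may hold
    more places than the API will return, so it is a candidate for splitting.
    """
    results: List[Dict] = []
    token: Optional[str] = None
    for _ in range(MAX_PAGES):
        # Assumption: fetch_page(None) requests the first page and
        # fetch_page(token) requests the page that token points to.
        # A real client would also wait briefly before using a new token.
        page = fetch_page(token)
        results.extend(page.get("results", []))
        token = page.get("next_page_token")
        if not token:
            break
    return results, len(results) >= CAP
```

A search that comes back with `hit_cap` set is a signal to retry the same area with a smaller radius or several smaller searches.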

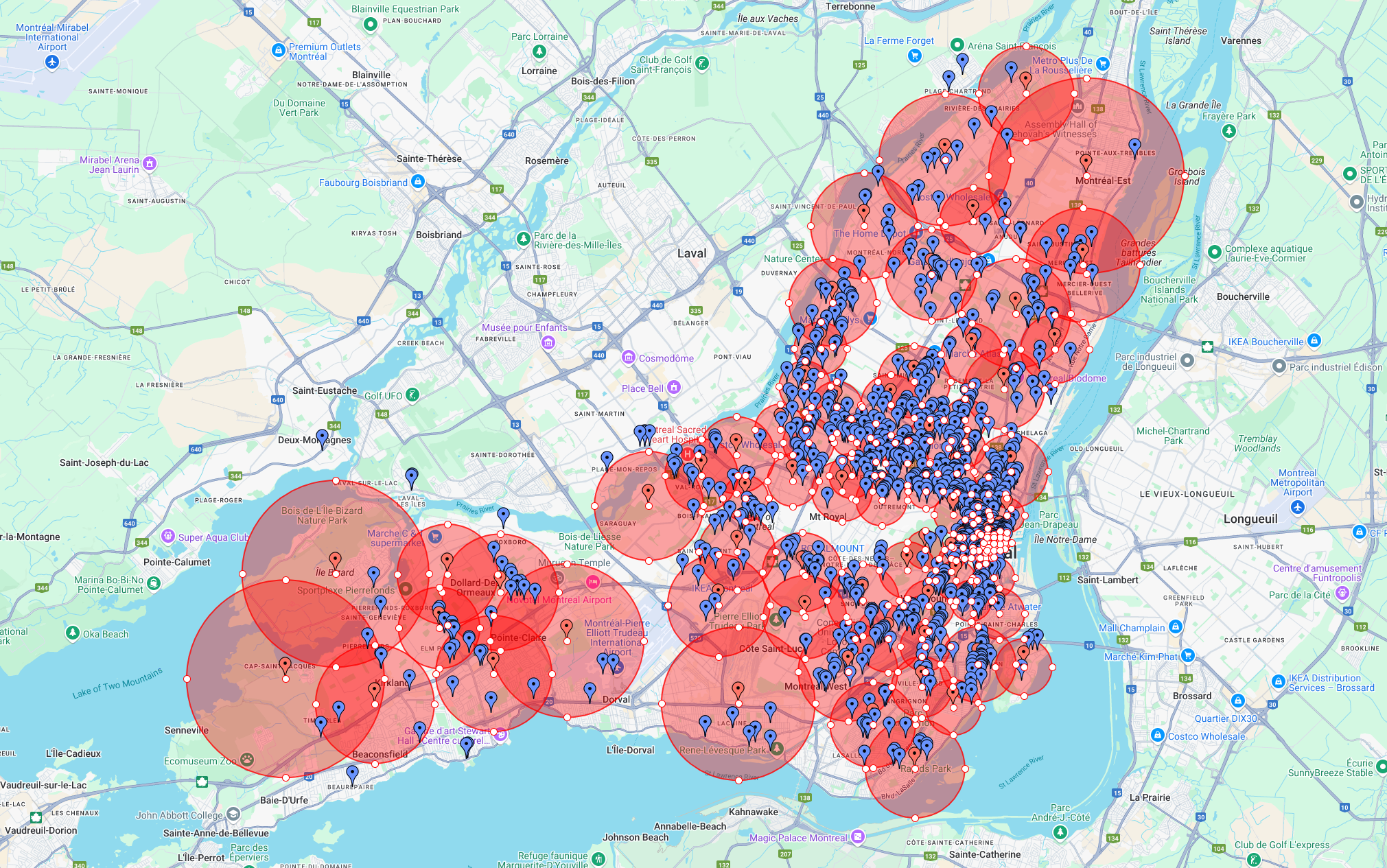

Initially, I scoped each text search to an individual neighborhood. Even then, some areas were too large. Switching to Forward Sortation Areas (FSAs, the first three characters of a postal code), each with a custom search radius, kept results under the limit and produced about 650 cafes. Dense downtown areas required multiple smaller grid cells (250 m radius), with deduplication downstream.
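
One way to picture the downtown grid and the downstream deduplication is the sketch below. It is not the code used in this project: the bounding-box approach, the function names, and the lattice spacing are all assumptions, and `place_id` is assumed to be the unique key returned for each place.

```python
import math
from typing import Dict, Iterable, List, Tuple

EARTH_RADIUS_M = 6_371_000

def grid_centers(south: float, west: float, north: float, east: float,
                 radius_m: float = 250.0) -> List[Tuple[float, float]]:
    """Return (lat, lng) centers of circles that together cover the box.

    Centers sit on a square lattice with spacing radius * sqrt(2), so every
    lattice cell fits inside its circle and no gaps are left between cells.
    """
    step_m = radius_m * math.sqrt(2)
    lat_step = math.degrees(step_m / EARTH_RADIUS_M)
    # Longitude degrees shrink with latitude; use the box's middle latitude.
    mid_lat = math.radians((south + north) / 2)
    lng_step = math.degrees(step_m / (EARTH_RADIUS_M * math.cos(mid_lat)))
    rows = max(1, math.ceil((north - south) / lat_step))
    cols = max(1, math.ceil((east - west) / lng_step))
    return [(south + (r + 0.5) * lat_step, west + (c + 0.5) * lng_step)
            for r in range(rows) for c in range(cols)]

def dedupe_by_place_id(batches: Iterable[Iterable[Dict]]) -> List[Dict]:
    """Keep the first copy of each place across overlapping searches."""
    seen: Dict[str, Dict] = {}
    for batch in batches:
        for place in batch:
            seen.setdefault(place["place_id"], place)
    return list(seen.values())
```

Because neighboring circles overlap by design, the same cafe can appear in several searches, which is why the deduplication step is needed.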

Because only 5 reviews per place are accessible, I fetch a daily snapshot and accumulate the reviews over time. This makes it possible to spot trending places by their short-term spikes in review activity.
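
The snapshot idea can be sketched roughly as follows. This is an illustration under assumptions rather than the project's actual pipeline: each review is assumed to carry `author_name` and a Unix `time` field, the composite key is a hypothetical stand-in for a review ID, and the 7-day window is an arbitrary choice.

```python
from collections import Counter
from datetime import date, datetime, timedelta, timezone
from typing import Dict, List, Tuple

ReviewKey = Tuple[str, str, int]  # (place_id, author_name, unix time)

def merge_snapshot(store: Dict[ReviewKey, dict], place_id: str,
                   reviews: List[dict]) -> int:
    """Add unseen reviews from one daily snapshot; return how many were new."""
    added = 0
    for review in reviews:
        key = (place_id, review["author_name"], review["time"])
        if key not in store:
            store[key] = review
            added += 1
    return added

def recent_review_counts(store: Dict[ReviewKey, dict], today: date,
                         window_days: int = 7) -> Counter:
    """Count accumulated reviews per place written in the last window_days."""
    cutoff = today - timedelta(days=window_days)
    counts: Counter = Counter()
    for place_id, _author, ts in store:
        written = datetime.fromtimestamp(ts, tz=timezone.utc).date()
        if cutoff < written <= today:
            counts[place_id] += 1
    return counts
```

Calling `recent_review_counts(store, date.today()).most_common(10)` would then list the ten places with the most recently written reviews among those collected so far.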

Map

To visualize the search grid coverage, I plotted the existing search points using their latitude/longitude and search radius.
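
As a sketch of how the search points could be handed to a map library, the snippet below packs them into GeoJSON. The function name and the `radius_m` property are hypothetical; the map library is expected to read that property and draw the circle itself (in Leaflet, for example, via `pointToLayer` and `L.circle`).

```python
import json
from typing import Dict, Iterable, Tuple

def search_grid_geojson(points: Iterable[Tuple[float, float, float]]) -> Dict:
    """Build a GeoJSON FeatureCollection from (lat, lng, radius_m) tuples."""
    features = [
        {
            "type": "Feature",
            # GeoJSON stores coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [lng, lat]},
            "properties": {"radius_m": radius_m},
        }
        for lat, lng, radius_m in points
    ]
    return {"type": "FeatureCollection", "features": features}

if __name__ == "__main__":
    # Example point in downtown Montreal with a 250 m search radius.
    print(json.dumps(search_grid_geojson([(45.5017, -73.5673, 250)]), indent=2))
```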

Billing challenges

I accidentally exceeded the free tier twice while testing, which required minor payments and a Google billing adjustment. Switching the map from Google Maps to Leaflet reduced the billing risk while keeping the visualization capabilities.

Data Flow

[WIP] Data architecture